How many scientists does it take to change a light bulb? None, they're still writing the grant proposal.

Academia

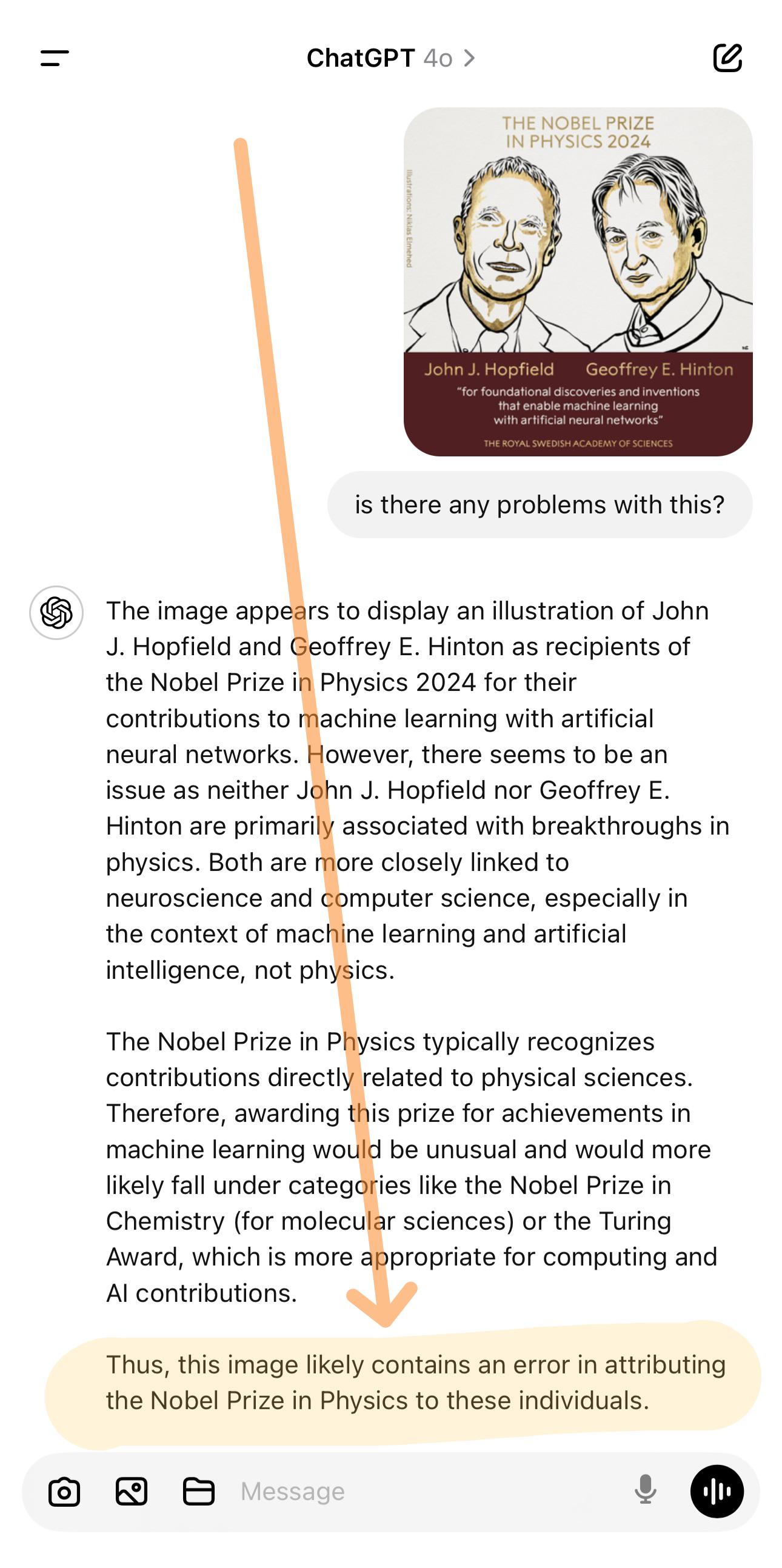

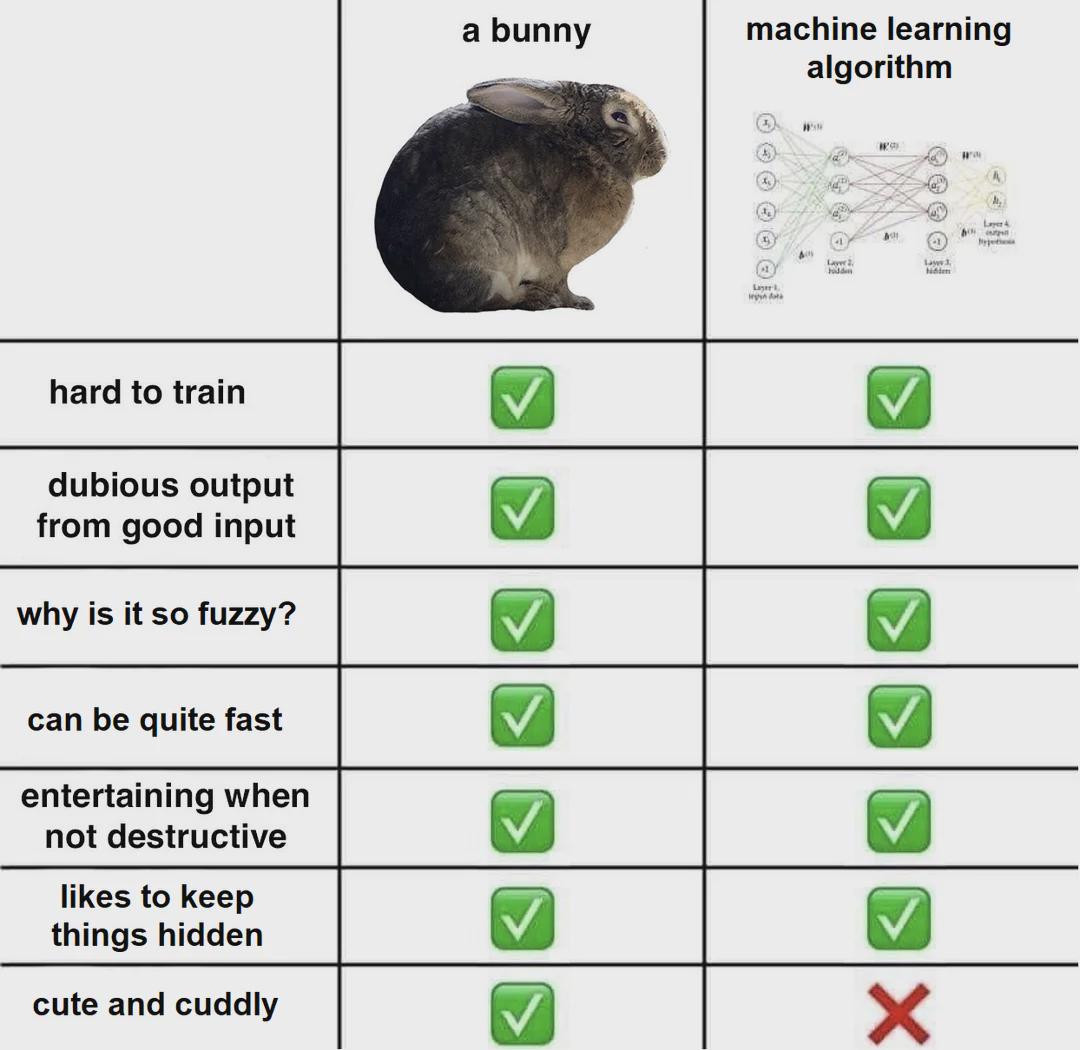

Ai

Astronomy

Biology

Chemistry

Climate

Conspiracy

Earth-science

Engineering

Evolution

Geology
