The ultimate dad joke about AI! A kid asks an innocent question about AI slope, and dad unleashes a mathematical tsunami that would make even neural network researchers sweat. First, he drops the attention mechanism formula (the one built around softmax, that fancy e^(stuff)/sum(e^(stuff)) expression), then proceeds to bombard the poor child with feed-forward neural networks, encoder-decoder architecture, and what looks like enough Greek symbols to make Pythagoras cry. The kid's response is priceless: the universal "I should've known better than to ask" realization that hits when you accidentally trigger a nerd's special interest. That's not just math, that's weaponized mathematics!

Academia

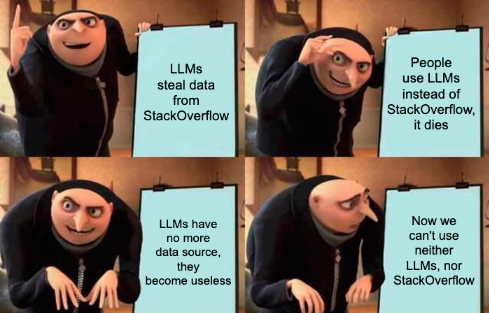

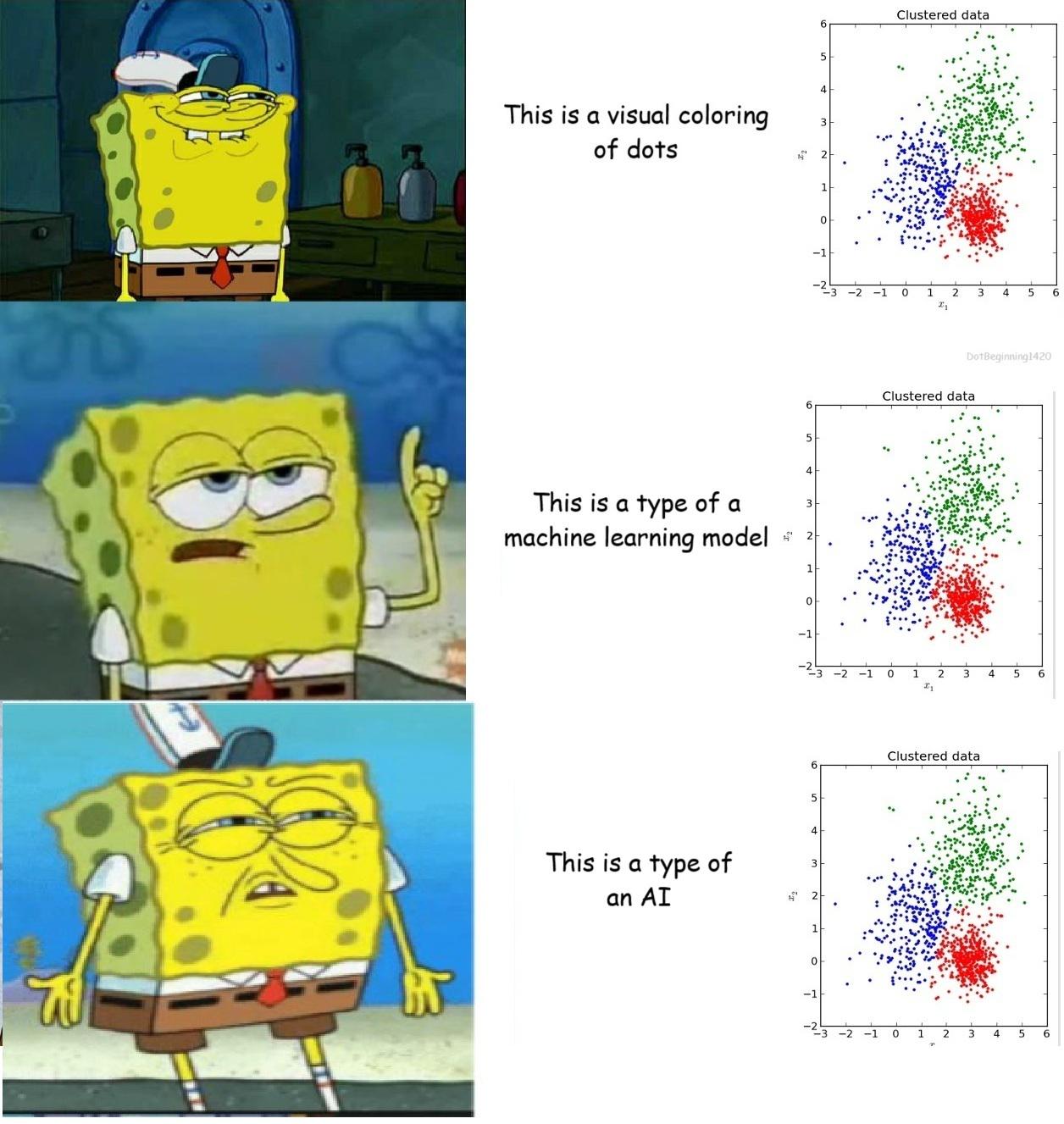

AI

Astronomy

Biology

Chemistry

Climate

Conspiracy

Earth-science

Engineering

Evolution

Geology
