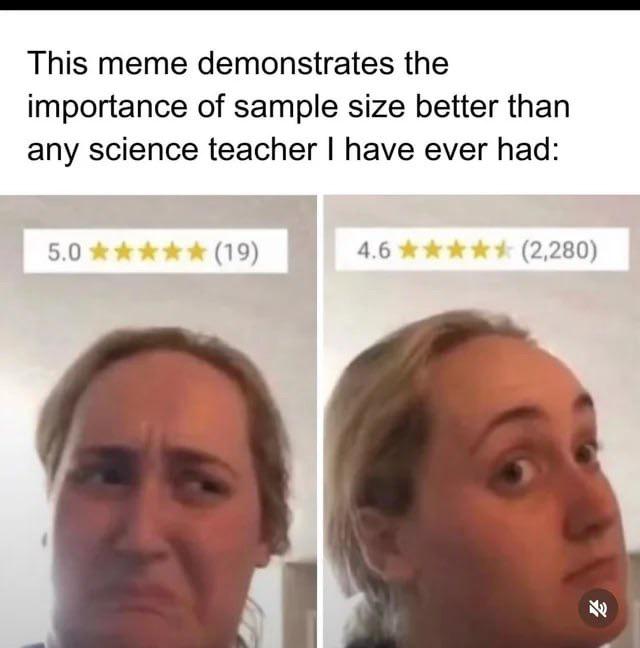

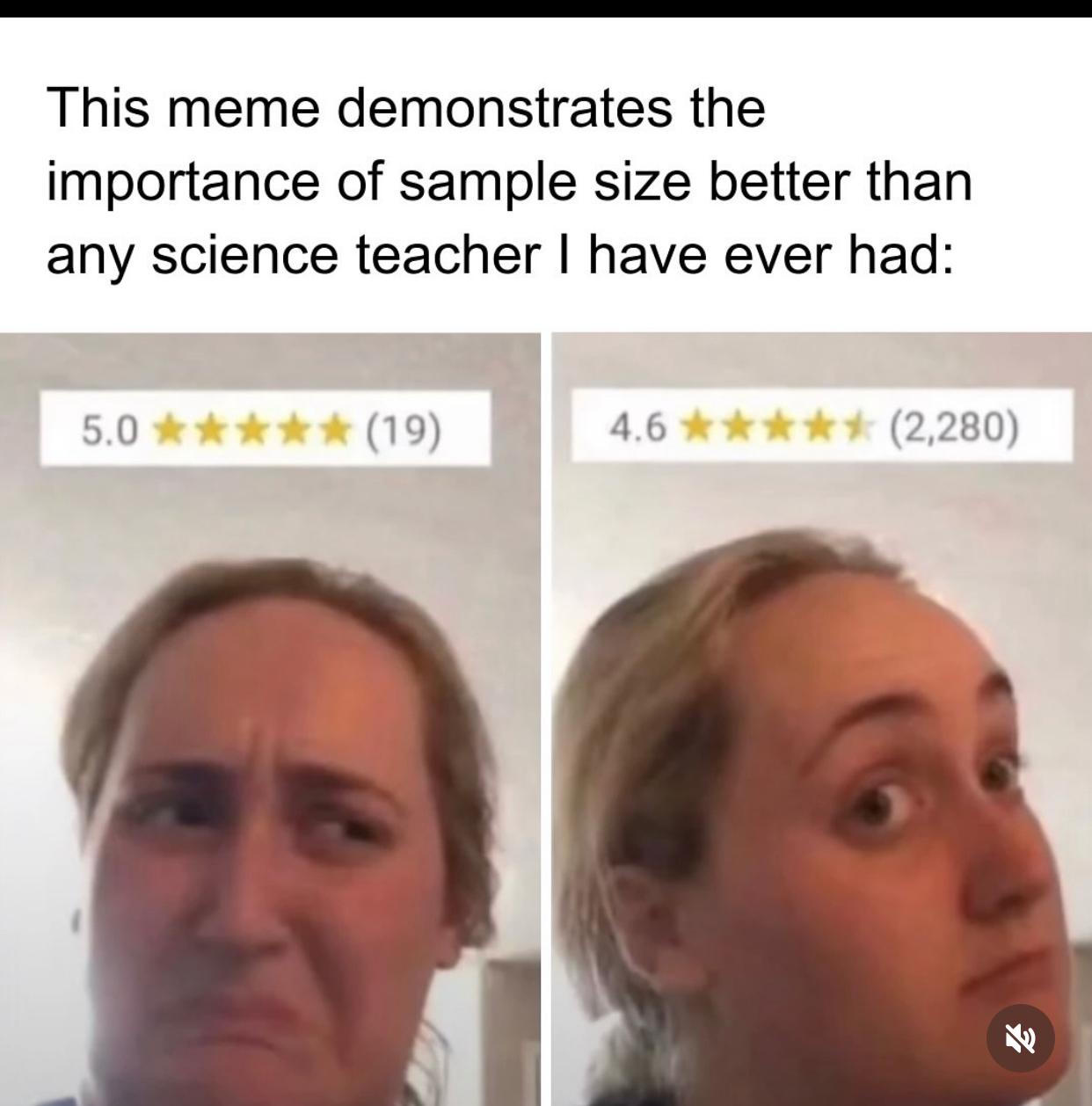

Behold! The perfect visual proof of why statisticians get twitchy about small sample sizes! On the left, a flawless 5-star rating based on a measly 19 reviews. On the right, a slightly lower 4.6 stars but with a whopping 2,280 reviews. Which would you trust? The second one, obviously! That first rating is like claiming you've discovered the perfect diet because you tried it on your pet goldfish and he looked happier. Statistics in the wild - more revealing than my lab coat after taco Tuesday! Remember, kids: a small n is just an anecdote wearing a fancy mathematical hat!
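For the skeptics who want numbers with their punchline, here is a minimal sketch of one common way to compare the two ratings: a shrunk (Bayesian-style) average that pads each product with a few imaginary "average" reviews. The function name `shrunk_rating`, the prior mean of 4.0 stars, and the prior weight of 50 pseudo-reviews are all assumptions made up for illustration, not values from the images.

```python
def shrunk_rating(avg_stars, n_reviews, prior_mean=4.0, prior_weight=50):
    """Pull an average rating toward prior_mean.

    prior_mean and prior_weight are hypothetical: they act like
    prior_weight extra reviews that each gave prior_mean stars.
    The fewer real reviews there are, the stronger the pull.
    """
    total_stars = prior_weight * prior_mean + n_reviews * avg_stars
    return total_stars / (prior_weight + n_reviews)


# The two ratings from the images: 5.0 stars (19 reviews) vs 4.6 stars (2,280 reviews)
small_sample = shrunk_rating(5.0, 19)
large_sample = shrunk_rating(4.6, 2280)

print(f"5.0 stars, 19 reviews    -> adjusted {small_sample:.2f}")
print(f"4.6 stars, 2,280 reviews -> adjusted {large_sample:.2f}")
```

Under these assumed settings the 19-review product drops to roughly 4.28 while the 2,280-review product barely moves from 4.6, so the "lower" rating comes out on top. Different prior choices shift the exact numbers, but the small sample always gets dragged harder.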
