Poor John von Neumann, just chilling at the bottom of the scientific recognition pool while Einstein gets all the high-fives from pop culture. Tesla's drowning somewhere in between, occasionally remembered for electric cars rather than his actual work. Meanwhile, von Neumann gave his name to the architecture behind modern computers, co-founded game theory, and contributed to the Manhattan Project, all while being so intellectually intimidating that other geniuses felt like children around him. But hey, no biopic or trendy t-shirts for you, John!

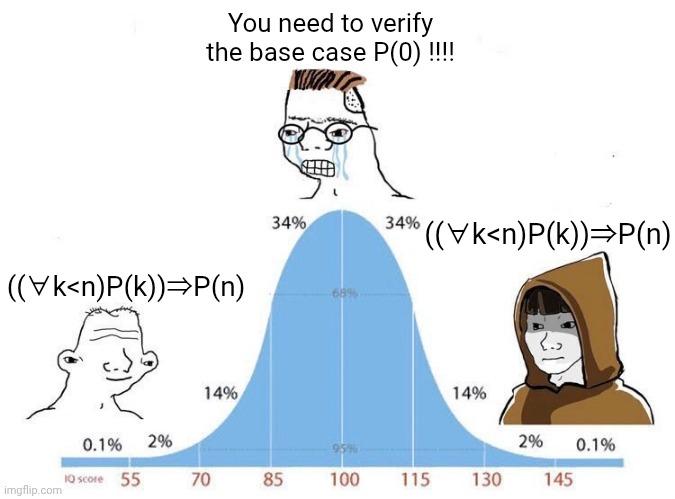

Academia

AI

Astronomy

Biology

Chemistry

Climate

Conspiracy

Earth-science

Engineering

Evolution

Geology
